Hello all,

Huge thanks to the team & community for maintaining this great library. I was hoping you might help me find what I’m missing in the implementation below.

I am trying to run `tune.run()` with a trainable function that wraps a `Trainer` instance from Hugging Face’s `transformers` library.

The code runs on my local machine, but now I am trying to use the `rayproject/ray` image to run distributed training on a Kubernetes cluster.

I used the following Dockerfile to build my own image on top of the Ray image:

```dockerfile
FROM rayproject/ray

# setup core compilers
RUN apt-get update && apt-get -y install gcc && apt-get -y install g++
RUN apt-get install inetutils-ping -y

# copy environment files
ENV PATH ${PATH}:/root/.local/bin
ENV PYTHONPATH ${PYTHONPATH}:/root/.local/bin
ADD requirements.txt /tmp/requirements.txt
RUN pip install --user -r /tmp/requirements.txt

# copy python script
RUN mkdir -p transformers_train
WORKDIR transformers_train
COPY ./ray_transformers.py ./ray_transformers.py
CMD python ray_transformers.py
```
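Since the same script works locally, one quick check is whether the container’s interpreter can actually import everything `requirements.txt` installs. A minimal sketch, assuming it is copied into the image and run with the same `python` the `CMD` uses; `missing_modules` is a hypothetical helper and the module names in `wanted` are placeholders (import names can differ from pip package names, e.g. `scikit-learn` imports as `sklearn`):

```python
"""Report which top-level modules this interpreter cannot import.

Hypothetical diagnostic: run it with the same `python` the container's CMD uses.
"""
import importlib.util
import sys


def missing_modules(names):
    """Return the subset of top-level module `names` not found on sys.path."""
    missing = []
    for name in names:
        # For a top-level (dot-free) name, find_spec returns None if not found
        if importlib.util.find_spec(name) is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Placeholder list: adjust to match the imports in ray_transformers.py
    wanted = ["ray", "transformers", "torch"]
    print("interpreter:", sys.executable)
    print("missing:", missing_modules(wanted) or "none")
```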

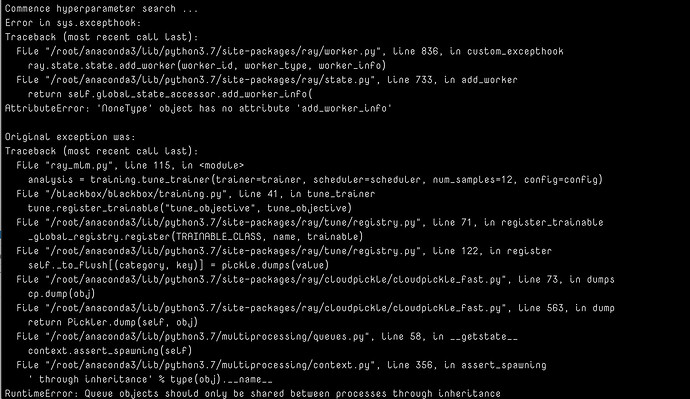

However, upon running the container, I encountered the following error:

The call to `tune.run()` is as follows:

```python
# Imports used by this snippet (from the rest of ray_transformers.py)
import os
from sys import stdout

from ray import tune
from ray.tune import CLIReporter
from transformers.trainer_utils import PREFIX_CHECKPOINT_DIR, default_compute_objective


def tune_trainer(trainer, scheduler, num_samples, config, progress_reporter=None,
                 use_checkpoints=True):
    """tune a trainer object """

    def tune_objective(trial, checkpoint_dir=None):
        """trainable for ray tune"""
        model_path = None
        stdout.write('DEBUG | Trainer checkpoint usage set to: ' + str(trainer.use_tune_checkpoints) + '\n')
        if checkpoint_dir:
            stdout.write('DEBUG | Tune checkpoint dir located at ... \n')
            stdout.write(checkpoint_dir)
            # Resume from a Trainer checkpoint saved inside the Tune checkpoint dir
            for subdir in os.listdir(checkpoint_dir):
                if subdir.startswith(PREFIX_CHECKPOINT_DIR):
                    model_path = os.path.join(checkpoint_dir, subdir)
        trainer.objective = None
        trainer.train(model_path=model_path, trial=trial)
        setattr(trainer, 'compute_objective', default_compute_objective)
        # If no evaluation happened during training, evaluate and report once
        if getattr(trainer, "objective", None) is None:
            metrics = trainer.evaluate()
            trainer.objective = trainer.compute_objective(metrics)
            trainer._tune_save_checkpoint()
            tune.report(objective=trainer.objective, **metrics, done=True)

    if not progress_reporter:
        progress_reporter = CLIReporter(metric_columns=["objective"])
    if use_checkpoints:
        trainer.use_tune_checkpoints = True

    # sync_config = tune.SyncConfig(
    #     sync_to_driver=NamespacedKubernetesSyncer("ray")
    # )

    tune.register_trainable("tune_objective", tune_objective)
    analysis = tune.run("tune_objective", scheduler=scheduler, num_samples=num_samples, config=config,
                        progress_reporter=progress_reporter, metric='objective', mode='min')
    return analysis
```
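`os.listdir` returns entries in arbitrary order, so if the Tune checkpoint directory ever holds more than one matching subdirectory, the loop in `tune_objective` keeps whichever one happens to be listed last. A minimal sketch of a deterministic alternative, assuming transformers’ `PREFIX_CHECKPOINT_DIR` is `"checkpoint"` and that `Trainer` names its subdirectories `checkpoint-<global_step>`; `latest_checkpoint` is a hypothetical helper, not part of transformers or Ray:

```python
import os
import re

# Assumed naming scheme: "<PREFIX_CHECKPOINT_DIR>-<global_step>", e.g. "checkpoint-500"
CHECKPOINT_RE = re.compile(r"^checkpoint-(\d+)$")


def latest_checkpoint(checkpoint_dir):
    """Return the path of the highest-step checkpoint subdirectory, or None."""
    best_step, best_path = -1, None
    for name in os.listdir(checkpoint_dir):
        match = CHECKPOINT_RE.match(name)
        path = os.path.join(checkpoint_dir, name)
        # Ignore plain files and names that do not follow the assumed pattern
        if match and os.path.isdir(path):
            step = int(match.group(1))
            if step > best_step:
                best_step, best_path = step, path
    return best_path
```

Inside `tune_objective`, the `for` loop would then reduce to `model_path = latest_checkpoint(checkpoint_dir)` when `checkpoint_dir` is set.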

What I’ve Tried

- Following the traceback, I noticed that when `tune.run()` sees that my trainable is a function rather than an `Experiment`, it wraps it in an `Experiment` instance. The comments on the `run_or_experiment` argument mention that if my function is not an experiment, I have to register it with `tune.register_trainable("lambda_id", lambda x: ...)`, so I tried that, but received the same error.
- Alternating between `transformers` 3.4 and 3.5.
- Adding `/root/.local/bin` to the `PYTHONPATH` variable, since pip reported (while installing `requirements.txt`) that some of the packages were being installed there. I suspected some of those packages were not being found because that directory wasn’t in `PYTHONPATH`, but the error persisted.
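For context on the last point: `<user base>/bin` (here `/root/.local/bin`) holds console scripts, which belong on `PATH`, while `pip install --user` puts importable packages in the separate user site-packages directory (on Linux typically `~/.local/lib/pythonX.Y/site-packages`). Adding the `bin` directory to `PYTHONPATH` therefore does not change what Python can import. Also, `~` resolves against whichever user runs `pip` and `python` in the container, so it is worth confirming that it matches the hard-coded `/root`. A minimal sketch that prints these locations from inside the container; `user_site_report` is a hypothetical helper built on the standard `site` module:

```python
import os
import site
import sys


def user_site_report():
    """Summarize where `pip install --user` targets for this interpreter."""
    user_site = site.getusersitepackages()
    return {
        # Console scripts go to <user_base>/bin; importable packages go to user_site
        "user_base": site.getuserbase(),
        "user_site": user_site,
        # False if user site-packages are disabled (e.g. `python -s`)
        "user_site_enabled": bool(site.ENABLE_USER_SITE),
        "user_site_on_sys_path": user_site in sys.path,
        "pythonpath": os.environ.get("PYTHONPATH", ""),
        "home": os.path.expanduser("~"),
    }


if __name__ == "__main__":
    for key, value in user_site_report().items():
        print(f"{key}: {value}")
```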

I will keep investigating, but I’m puzzled because the script works fine on my local machine.

This leads me to suspect the cause is some obvious environment configuration that I’m missing. If anyone has a clue as to what I’m overlooking, I would be hugely grateful.

Much thanks!