1. Severity of the issue: (select one)

None: I’m just curious or want clarification.

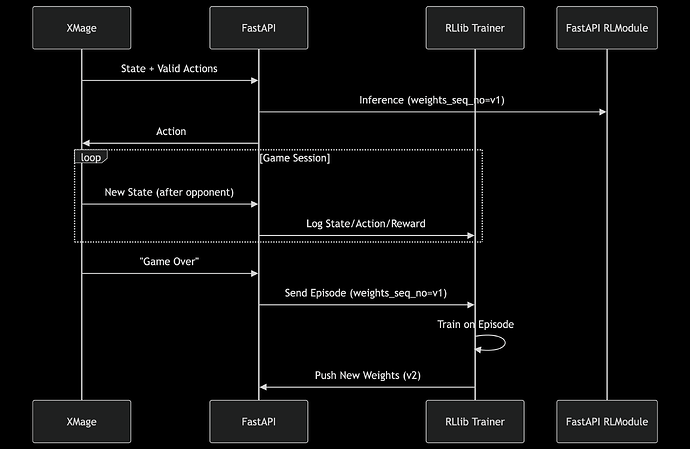

1. Context & Goal

I’m building an RL agent for a complex turn-based card game. The current setup:

- The game engine sends game states (including possible actions) to a FastAPI server.
- The FastAPI server uses a rule-based system to select actions and returns them to the game engine.

Goal: Replace the rule-based system with an RLlib-trained policy.

2. Proposed Architecture

Based on env_connecting_to_rllib_w_tcp_client.py, I plan to:

- Use FastAPI as the “Client” (replacing `_dummy_client`).
- Maintain an RLModule instance in FastAPI for low-latency inference.
- Use RLlib Trainer for centralized training.

3. Doubts & Questions

Doubt 1: RLModule Sync Workflow

I understand I need:

- An RLModule instance in FastAPI (for inference).
- An RLModule in RLlib Trainer (for training).

Sync Workflow:

- FastAPI uses `weights_seq_no=v1` for all inferences in a game.
- At game end, it sends the trajectory (states/actions/rewards) to RLlib Trainer.
- RLlib Trainer trains on the trajectory and pushes updated weights (`weights_seq_no=v2`) to FastAPI.

Is this correct?
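To make the workflow above concrete, here is a minimal sketch of how the FastAPI side might collect one game's trajectory and tag it with the weights version used for inference. `Episode`, `add_step`, and `to_payload` are hypothetical names for illustration only; this is not the RLlink message format, just one way to keep the version attached to the data.

```python
from dataclasses import dataclass, field, asdict
from typing import Any, Dict, List


@dataclass
class Episode:
    """One game's trajectory, pinned to the weights version it was played with."""

    game_id: str
    weights_seq_no: int  # version used for every inference in this game
    observations: List[Any] = field(default_factory=list)
    actions: List[Any] = field(default_factory=list)
    rewards: List[float] = field(default_factory=list)

    def add_step(self, obs: Any, action: Any, reward: float) -> None:
        # Record one (state, action, reward) transition.
        self.observations.append(obs)
        self.actions.append(action)
        self.rewards.append(float(reward))

    def to_payload(self) -> Dict[str, Any]:
        # Plain dict that could be serialized and sent to the trainer at game end.
        return asdict(self)
```

At game end, the payload carries `weights_seq_no` alongside the steps, so the trainer can tell which weights produced the data.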

Doubt 2: Weight Versioning with Parallel Games

Imagine two parallel games:

- Both start with `weights_seq_no=v1`.
- Game 1 finishes first → Trainer updates to `v2` → pushes to FastAPI.
- Game 2 (still running) uses `v1` until completion.

Questions:

- Is RLlib Trainer with the External Environment smart enough to handle off-policy trajectories (generated with older weights)?
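For reference, two generic ways to deal with trajectories from older weights are (a) dropping episodes that lag too far behind the current version and (b) down-weighting steps with truncated importance ratios, the idea behind V-trace in IMPALA-style algorithms. The sketch below illustrates both in plain Python. It assumes the client also records the log-probability of each chosen action under the weights it used; `truncated_importance_weights`, `is_too_stale`, `clip`, and `max_lag` are hypothetical names and defaults, not RLlib APIs. Whether RLlib applies such a correction depends on the configured algorithm.

```python
import math
from typing import List, Sequence


def truncated_importance_weights(
    behavior_logps: Sequence[float],
    target_logps: Sequence[float],
    clip: float = 1.0,
) -> List[float]:
    """Per-step ratio pi_target(a|s) / pi_behavior(a|s), truncated at `clip`."""
    return [
        min(clip, math.exp(t - b))
        for b, t in zip(behavior_logps, target_logps)
    ]


def is_too_stale(episode_seq_no: int, current_seq_no: int, max_lag: int = 2) -> bool:
    """True if the episode was generated more than `max_lag` versions ago."""
    return current_seq_no - episode_seq_no > max_lag
```

In the two-game scenario, Game 2's episode would arrive with a lag of one version (`v1` data while the trainer is at `v2`), so it would pass a `max_lag=2` filter and only be down-weighted where the new policy disagrees with the old one.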

4. Key Concerns

- Scalability: Handling 100+ parallel games with weight versioning. The game engine and FastAPI server could potentially be handling multiple games in parallel and I’d like to use trajectories from all of them for training.
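One possible way to keep versioning manageable with many concurrent games is to pin each game to the version that was latest when it started and to drop old versions once no running game uses them. The following is a sketch under that assumption; `WeightStore` and its methods are hypothetical, and `weights` stands in for whatever state the RLModule would load.

```python
import threading
from typing import Any, Dict


class WeightStore:
    """Thread-safe store of weight versions with per-game pinning."""

    def __init__(self, initial_weights: Any, seq_no: int = 1) -> None:
        self._lock = threading.Lock()
        self._versions: Dict[int, Any] = {seq_no: initial_weights}
        self._latest = seq_no
        self._pinned: Dict[str, int] = {}  # game_id -> weights_seq_no

    def start_game(self, game_id: str) -> int:
        # Pin the new game to the newest version available right now.
        with self._lock:
            self._pinned[game_id] = self._latest
            return self._latest

    def weights_for(self, game_id: str) -> Any:
        # Always return the version the game started with.
        with self._lock:
            return self._versions[self._pinned[game_id]]

    def push(self, weights: Any, seq_no: int) -> None:
        # Called when the trainer publishes updated weights.
        with self._lock:
            self._versions[seq_no] = weights
            self._latest = max(self._latest, seq_no)
            self._evict_unused()

    def end_game(self, game_id: str) -> int:
        # Unpin the game and return the version its trajectory was generated with.
        with self._lock:
            seq_no = self._pinned.pop(game_id)
            self._evict_unused()
            return seq_no

    def _evict_unused(self) -> None:
        # Keep only the latest version and versions still used by running games.
        in_use = set(self._pinned.values()) | {self._latest}
        for version in list(self._versions):
            if version not in in_use:
                del self._versions[version]
```

With this layout, memory grows with the number of distinct versions still in use rather than with the number of games, since games that started on the same version share one copy of the weights.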

Request

Could you validate this architecture? Specifically:

- Is the RLlink workflow correct?
- How should I handle weight versioning for parallel off-policy trajectories?

Thank you for your incredible work!