Hi guys,

I am trying to familiarize myself with RLlib's MADDPG implementation, so the first thing I did was reproduce the MPE training results and compare them with those from OpenAI's implementation (i.e., the same comparison this page did).

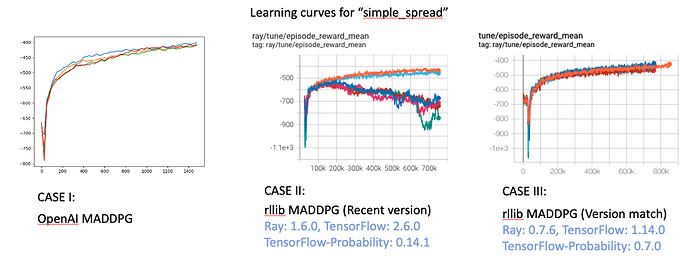

However, using the “simple_spread” MPE scenario, I couldn't reproduce the matching results shown in the previous link. See Case I vs. Case II in the figure below: across six different trials, Case II's learning curves are less stable. In four of the runs the reward level decreases as training goes on, while the other two look okay.

After a little investigation, I found that if I use earlier versions of the libraries (found here; the three most important ones are highlighted in the figure), the learning curves are more stable and consistent.
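To pin down exactly which library versions differ between the "old" and "new" setups, one option is to snapshot every installed package in each environment and diff the two snapshots. The sketch below uses only the standard library (`importlib.metadata`); the helper names `installed_versions` and `diff_versions` and the `lib_*` package names and version strings are hypothetical placeholders, not real results.

```python
from importlib import metadata


def installed_versions():
    """Return {lowercased distribution name: version} for the current environment."""
    versions = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip distributions with broken or missing metadata
            versions[name.lower()] = dist.version
    return versions


def diff_versions(old, new):
    """Return {package: (old_version, new_version)} for every package that differs.

    A package present in only one snapshot is reported with None on the other side.
    """
    changed = {}
    for pkg in sorted(set(old) | set(new)):
        if old.get(pkg) != new.get(pkg):
            changed[pkg] = (old.get(pkg), new.get(pkg))
    return changed


# Hypothetical snapshots with made-up names and versions; in practice, call
# installed_versions() inside each environment and save the result (e.g. as JSON).
old_env = {"lib_a": "1.0", "lib_b": "0.5", "lib_c": "2.1"}
new_env = {"lib_a": "1.3", "lib_b": "0.5", "lib_d": "0.1"}
print(diff_versions(old_env, new_env))
```

Swapping libraries back one at a time, starting from the entries this diff reports, can then narrow down which upgrade changes the learning behavior.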

I checked that the MPE environment I used is exactly the same, as are the actor and critic loss definitions in the MADDPG policy and all of the hyperparameters, so I'm not sure why learning behaves differently with the more recent RLlib version. Can anyone provide some pointers or possible explanations for this? I would love to understand this better and, ideally, fix the issue. Thanks!
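One way to double-check the "all hyperparameters are the same" part is to compare the full resolved configs of both runs rather than only the keys set explicitly, since defaults for keys that were never set may differ between library versions. If the runs go through Tune, each trial directory should contain a `params.json` that could be loaded for this. The sketch below flattens nested dicts and reports every differing key; the config keys and values shown are hypothetical examples, not RLlib's actual MADDPG settings.

```python
def flatten(cfg, prefix=""):
    """Flatten nested dicts into {"a.b.c": value} so configs compare key by key."""
    flat = {}
    for key, value in cfg.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, path + "."))
        else:
            flat[path] = value
    return flat


def diff_configs(cfg_a, cfg_b):
    """Return {dotted_key: (value_a, value_b)} for every key whose values differ.

    A key absent from one config is shown as the string "<missing>" on that side.
    """
    a, b = flatten(cfg_a), flatten(cfg_b)
    diffs = {}
    for key in sorted(set(a) | set(b)):
        va, vb = a.get(key, "<missing>"), b.get(key, "<missing>")
        if va != vb:
            diffs[key] = (va, vb)
    return diffs


# Hypothetical configs: the explicitly set values match, but the newer setup
# resolves a different nested default and an extra key that was never set.
old_cfg = {"actor_lr": 1e-2, "critic_lr": 1e-2, "tau": 0.01,
           "model": {"hidden_sizes": [64, 64], "activation": "relu"}}
new_cfg = {"actor_lr": 1e-2, "critic_lr": 1e-2, "tau": 0.01,
           "model": {"hidden_sizes": [64, 64], "activation": "tanh"},
           "hypothetical_new_default": 0.5}
print(diff_configs(old_cfg, new_cfg))
```

Flattening first means a difference buried deep inside a nested sub-config is reported with its full dotted path instead of as one large mismatched dict.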