### Search before asking

- [X] I searched the [issues](https://github.com/ray-p…roject/ray/issues) and found no similar issues.

### Ray Component

RLlib

### What happened + What you expected to happen

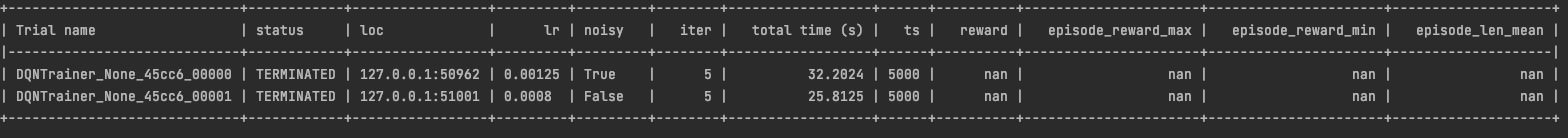

Running the cartpole server and client examples generates reports (i.e. `result.json` and `progress.csv`) with NaN for the most important metrics, for instance:

```

{"episode_reward_max": NaN, "episode_reward_min": NaN, "episode_reward_mean": NaN, "episode_len_mean": NaN, "episode_media": {}, "episodes_this_iter": 0, "policy_reward_min": {}, "policy_reward_max": {}, "policy_reward_mean": {}, "custom_metrics": {}, "hist_stats": {"episode_reward": [], "episode_lengths": []}, "sampler_perf": {}, "off_policy_estimator": {}, "num_healthy_workers": 0, "timesteps_total": 4000, "timesteps_this_iter": 0, "agent_timesteps_total": 4000, "timers": {"sample_time_ms": 52132.77, "sample_throughput": 76.727, "load_time_ms": 2.515, "load_throughput": 1590257.441, "learn_time_ms": 4094.017, "learn_throughput": 977.036}, "info": {"learner": {"default_policy": {"learner_stats": {"cur_kl_coeff": 0.20000000298023224, "cur_lr": 4.999999873689376e-05, "total_loss": 227.4500274658203, "policy_loss": -0.03600919246673584, "vf_loss": 227.48060607910156, "vf_explained_var": 0.03606006130576134, "kl": 0.027223078534007072, "entropy": 0.6669929027557373, "entropy_coeff": 0.0, "model": {}}, "custom_metrics": {}}}, "num_steps_sampled": 4000, "num_agent_steps_sampled": 4000, "num_steps_trained": 4000, "num_agent_steps_trained": 4000}, "done": false, "episodes_total": 0, "training_iteration": 1, "trial_id": "57526_00000", "experiment_id": "994aa06470444e9685b6fad672b7500c", "date": "2021-12-22_17-42-44", "timestamp": 1640212964, "time_this_iter_s": 56.22953796386719, "time_total_s": 56.22953796386719, "pid": 305610, "hostname": "john-desktop", "node_ip": "192.168.0.193", "config": {"num_workers": 0, "num_envs_per_worker": 1, "create_env_on_driver": false, "rollout_fragment_length": 1000, "batch_mode": "truncate_episodes", "gamma": 0.99, "lr": 5e-05, "train_batch_size": 4000, "model": {"_use_default_native_models": false, "_disable_preprocessor_api": false, "fcnet_hiddens": [256, 256], "fcnet_activation": "tanh", "conv_filters": null, "conv_activation": "relu", "post_fcnet_hiddens": [], "post_fcnet_activation": "relu", "free_log_std": false, "no_final_linear": 
false, "vf_share_layers": false, "use_lstm": false, "max_seq_len": 20, "lstm_cell_size": 256, "lstm_use_prev_action": false, "lstm_use_prev_reward": false, "_time_major": false, "use_attention": false, "attention_num_transformer_units": 1, "attention_dim": 64, "attention_num_heads": 1, "attention_head_dim": 32, "attention_memory_inference": 50, "attention_memory_training": 50, "attention_position_wise_mlp_dim": 32, "attention_init_gru_gate_bias": 2.0, "attention_use_n_prev_actions": 0, "attention_use_n_prev_rewards": 0, "framestack": true, "dim": 84, "grayscale": false, "zero_mean": true, "custom_model": null, "custom_model_config": {}, "custom_action_dist": null, "custom_preprocessor": null, "lstm_use_prev_action_reward": -1}, "optimizer": {}, "horizon": null, "soft_horizon": false, "no_done_at_end": false, "env": null, "observation_space": "Box([-inf -inf -inf -inf], [inf inf inf inf], (4,), float32)", "action_space": "Discrete(2)", "env_config": {}, "remote_worker_envs": false, "remote_env_batch_wait_ms": 0, "env_task_fn": null, "render_env": false, "record_env": false, "clip_rewards": null, "normalize_actions": true, "clip_actions": false, "preprocessor_pref": "deepmind", "log_level": "INFO", "callbacks": "<class 'ray.rllib.agents.callbacks.DefaultCallbacks'>", "ignore_worker_failures": false, "log_sys_usage": true, "fake_sampler": false, "framework": "tf", "eager_tracing": false, "eager_max_retraces": 20, "explore": true, "exploration_config": {"type": "StochasticSampling"}, "evaluation_interval": null, "evaluation_num_episodes": 10, "evaluation_parallel_to_training": false, "in_evaluation": false, "evaluation_config": {}, "evaluation_num_workers": 0, "custom_eval_function": null, "sample_async": false, "sample_collector": "<class 'ray.rllib.evaluation.collectors.simple_list_collector.SimpleListCollector'>", "observation_filter": "NoFilter", "synchronize_filters": true, "tf_session_args": {"intra_op_parallelism_threads": 2, "inter_op_parallelism_threads": 2, 
"gpu_options": {"allow_growth": true}, "log_device_placement": false, "device_count": {"CPU": 1}, "allow_soft_placement": true}, "local_tf_session_args": {"intra_op_parallelism_threads": 8, "inter_op_parallelism_threads": 8}, "compress_observations": false, "collect_metrics_timeout": 180, "metrics_smoothing_episodes": 100, "min_iter_time_s": 0, "timesteps_per_iteration": 0, "seed": null, "extra_python_environs_for_driver": {}, "extra_python_environs_for_worker": {}, "num_gpus": 0, "_fake_gpus": false, "num_cpus_per_worker": 1, "num_gpus_per_worker": 0, "custom_resources_per_worker": {}, "num_cpus_for_driver": 1, "placement_strategy": "PACK", "input": "<function _input at 0x7f9fd4161280>", "input_config": {}, "actions_in_input_normalized": false, "input_evaluation": [], "postprocess_inputs": false, "shuffle_buffer_size": 0, "output": null, "output_compress_columns": ["obs", "new_obs"], "output_max_file_size": 67108864, "multiagent": {"policies": {"default_policy": [null, null, null, {}]}, "policy_map_capacity": 100, "policy_map_cache": null, "policy_mapping_fn": null, "policies_to_train": null, "observation_fn": null, "replay_mode": "independent", "count_steps_by": "env_steps"}, "logger_config": null, "_tf_policy_handles_more_than_one_loss": false, "_disable_preprocessor_api": false, "simple_optimizer": false, "monitor": -1, "use_critic": true, "use_gae": true, "lambda": 1.0, "kl_coeff": 0.2, "sgd_minibatch_size": 128, "shuffle_sequences": true, "num_sgd_iter": 30, "lr_schedule": null, "vf_loss_coeff": 1.0, "entropy_coeff": 0.0, "entropy_coeff_schedule": null, "clip_param": 0.3, "vf_clip_param": 10.0, "grad_clip": null, "kl_target": 0.01, "vf_share_layers": -1}, "time_since_restore": 56.22953796386719, "timesteps_since_restore": 0, "iterations_since_restore": 1, "perf": {"cpu_util_percent": 11.541975308641977, "ram_util_percent": 23.590123456790124}}

{"episode_reward_max": NaN, "episode_reward_min": NaN, "episode_reward_mean": NaN, "episode_len_mean": NaN, "episode_media": {}, "episodes_this_iter": 0, "policy_reward_min": {}, "policy_reward_max": {}, "policy_reward_mean": {}, "custom_metrics": {}, "hist_stats": {"episode_reward": [], "episode_lengths": []}, "sampler_perf": {}, "off_policy_estimator": {}, "num_healthy_workers": 0, "timesteps_total": 8000, "timesteps_this_iter": 0, "agent_timesteps_total": 8000, "timers": {"sample_time_ms": 50382.451, "sample_throughput": 79.393, "load_time_ms": 2.965, "load_throughput": 1348921.889, "learn_time_ms": 4066.27, "learn_throughput": 983.703}, "info": {"learner": {"default_policy": {"learner_stats": {"cur_kl_coeff": 0.30000001192092896, "cur_lr": 4.999999873689376e-05, "total_loss": 272.2585144042969, "policy_loss": -0.028621336445212364, "vf_loss": 272.280517578125, "vf_explained_var": 0.136295348405838, "kl": 0.02211819775402546, "entropy": 0.6365824341773987, "entropy_coeff": 0.0, "model": {}}, "custom_metrics": {}}}, "num_steps_sampled": 8000, "num_agent_steps_sampled": 8000, "num_steps_trained": 8000, "num_agent_steps_trained": 8000, "num_steps_trained_this_iter": 0}, "done": false, "episodes_total": 0, "training_iteration": 2, "trial_id": "57526_00000", "experiment_id": "994aa06470444e9685b6fad672b7500c", "date": "2021-12-22_17-43-32", "timestamp": 1640213012, "time_this_iter_s": 48.491934299468994, "time_total_s": 104.72147226333618, "pid": 305610, "hostname": "john-desktop", "node_ip": "192.168.0.193", "config": {"num_workers": 0, "num_envs_per_worker": 1, "create_env_on_driver": false, "rollout_fragment_length": 1000, "batch_mode": "truncate_episodes", "gamma": 0.99, "lr": 5e-05, "train_batch_size": 4000, "model": {"_use_default_native_models": false, "_disable_preprocessor_api": false, "fcnet_hiddens": [256, 256], "fcnet_activation": "tanh", "conv_filters": null, "conv_activation": "relu", "post_fcnet_hiddens": [], "post_fcnet_activation": "relu", 
"free_log_std": false, "no_final_linear": false, "vf_share_layers": false, "use_lstm": false, "max_seq_len": 20, "lstm_cell_size": 256, "lstm_use_prev_action": false, "lstm_use_prev_reward": false, "_time_major": false, "use_attention": false, "attention_num_transformer_units": 1, "attention_dim": 64, "attention_num_heads": 1, "attention_head_dim": 32, "attention_memory_inference": 50, "attention_memory_training": 50, "attention_position_wise_mlp_dim": 32, "attention_init_gru_gate_bias": 2.0, "attention_use_n_prev_actions": 0, "attention_use_n_prev_rewards": 0, "framestack": true, "dim": 84, "grayscale": false, "zero_mean": true, "custom_model": null, "custom_model_config": {}, "custom_action_dist": null, "custom_preprocessor": null, "lstm_use_prev_action_reward": -1}, "optimizer": {}, "horizon": null, "soft_horizon": false, "no_done_at_end": false, "env": null, "observation_space": "Box([-inf -inf -inf -inf], [inf inf inf inf], (4,), float32)", "action_space": "Discrete(2)", "env_config": {}, "remote_worker_envs": false, "remote_env_batch_wait_ms": 0, "env_task_fn": null, "render_env": false, "record_env": false, "clip_rewards": null, "normalize_actions": true, "clip_actions": false, "preprocessor_pref": "deepmind", "log_level": "INFO", "callbacks": "<class 'ray.rllib.agents.callbacks.DefaultCallbacks'>", "ignore_worker_failures": false, "log_sys_usage": true, "fake_sampler": false, "framework": "tf", "eager_tracing": false, "eager_max_retraces": 20, "explore": true, "exploration_config": {"type": "StochasticSampling"}, "evaluation_interval": null, "evaluation_num_episodes": 10, "evaluation_parallel_to_training": false, "in_evaluation": false, "evaluation_config": {}, "evaluation_num_workers": 0, "custom_eval_function": null, "sample_async": false, "sample_collector": "<class 'ray.rllib.evaluation.collectors.simple_list_collector.SimpleListCollector'>", "observation_filter": "NoFilter", "synchronize_filters": true, "tf_session_args": 
{"intra_op_parallelism_threads": 2, "inter_op_parallelism_threads": 2, "gpu_options": {"allow_growth": true}, "log_device_placement": false, "device_count": {"CPU": 1}, "allow_soft_placement": true}, "local_tf_session_args": {"intra_op_parallelism_threads": 8, "inter_op_parallelism_threads": 8}, "compress_observations": false, "collect_metrics_timeout": 180, "metrics_smoothing_episodes": 100, "min_iter_time_s": 0, "timesteps_per_iteration": 0, "seed": null, "extra_python_environs_for_driver": {}, "extra_python_environs_for_worker": {}, "num_gpus": 0, "_fake_gpus": false, "num_cpus_per_worker": 1, "num_gpus_per_worker": 0, "custom_resources_per_worker": {}, "num_cpus_for_driver": 1, "placement_strategy": "PACK", "input": "<function _input at 0x7f9f8827c1f0>", "input_config": {}, "actions_in_input_normalized": false, "input_evaluation": [], "postprocess_inputs": false, "shuffle_buffer_size": 0, "output": null, "output_compress_columns": ["obs", "new_obs"], "output_max_file_size": 67108864, "multiagent": {"policies": {"default_policy": [null, null, null, {}]}, "policy_map_capacity": 100, "policy_map_cache": null, "policy_mapping_fn": null, "policies_to_train": null, "observation_fn": null, "replay_mode": "independent", "count_steps_by": "env_steps"}, "logger_config": null, "_tf_policy_handles_more_than_one_loss": false, "_disable_preprocessor_api": false, "simple_optimizer": false, "monitor": -1, "use_critic": true, "use_gae": true, "lambda": 1.0, "kl_coeff": 0.2, "sgd_minibatch_size": 128, "shuffle_sequences": true, "num_sgd_iter": 30, "lr_schedule": null, "vf_loss_coeff": 1.0, "entropy_coeff": 0.0, "entropy_coeff_schedule": null, "clip_param": 0.3, "vf_clip_param": 10.0, "grad_clip": null, "kl_target": 0.01, "vf_share_layers": -1}, "time_since_restore": 104.72147226333618, "timesteps_since_restore": 0, "iterations_since_restore": 2, "perf": {"cpu_util_percent": 12.408695652173913, "ram_util_percent": 24.214492753623198}}

{"episode_reward_max": NaN, "episode_reward_min": NaN, "episode_reward_mean": NaN, "episode_len_mean": NaN, "episode_media": {}, "episodes_this_iter": 0, "policy_reward_min": {}, "policy_reward_max": {}, "policy_reward_mean": {}, "custom_metrics": {}, "hist_stats": {"episode_reward": [], "episode_lengths": []}, "sampler_perf": {}, "off_policy_estimator": {}, "num_healthy_workers": 0, "timesteps_total": 12000, "timesteps_this_iter": 0, "agent_timesteps_total": 12000, "timers": {"sample_time_ms": 49611.128, "sample_throughput": 80.627, "load_time_ms": 2.783, "load_throughput": 1437554.21, "learn_time_ms": 4015.917, "learn_throughput": 996.037}, "info": {"learner": {"default_policy": {"learner_stats": {"cur_kl_coeff": 0.44999998807907104, "cur_lr": 4.999999873689376e-05, "total_loss": 647.337646484375, "policy_loss": -0.028608763590455055, "vf_loss": 647.359130859375, "vf_explained_var": 0.1282048523426056, "kl": 0.015827370807528496, "entropy": 0.5923504829406738, "entropy_coeff": 0.0, "model": {}}, "custom_metrics": {}}}, "num_steps_sampled": 12000, "num_agent_steps_sampled": 12000, "num_steps_trained": 12000, "num_agent_steps_trained": 12000, "num_steps_trained_this_iter": 0}, "done": false, "episodes_total": 0, "training_iteration": 3, "trial_id": "57526_00000", "experiment_id": "994aa06470444e9685b6fad672b7500c", "date": "2021-12-22_17-44-20", "timestamp": 1640213060, "time_this_iter_s": 47.8851535320282, "time_total_s": 152.60662579536438, "pid": 305610, "hostname": "john-desktop", "node_ip": "192.168.0.193", "config": {"num_workers": 0, "num_envs_per_worker": 1, "create_env_on_driver": false, "rollout_fragment_length": 1000, "batch_mode": "truncate_episodes", "gamma": 0.99, "lr": 5e-05, "train_batch_size": 4000, "model": {"_use_default_native_models": false, "_disable_preprocessor_api": false, "fcnet_hiddens": [256, 256], "fcnet_activation": "tanh", "conv_filters": null, "conv_activation": "relu", "post_fcnet_hiddens": [], "post_fcnet_activation": "relu", 
"free_log_std": false, "no_final_linear": false, "vf_share_layers": false, "use_lstm": false, "max_seq_len": 20, "lstm_cell_size": 256, "lstm_use_prev_action": false, "lstm_use_prev_reward": false, "_time_major": false, "use_attention": false, "attention_num_transformer_units": 1, "attention_dim": 64, "attention_num_heads": 1, "attention_head_dim": 32, "attention_memory_inference": 50, "attention_memory_training": 50, "attention_position_wise_mlp_dim": 32, "attention_init_gru_gate_bias": 2.0, "attention_use_n_prev_actions": 0, "attention_use_n_prev_rewards": 0, "framestack": true, "dim": 84, "grayscale": false, "zero_mean": true, "custom_model": null, "custom_model_config": {}, "custom_action_dist": null, "custom_preprocessor": null, "lstm_use_prev_action_reward": -1}, "optimizer": {}, "horizon": null, "soft_horizon": false, "no_done_at_end": false, "env": null, "observation_space": "Box([-inf -inf -inf -inf], [inf inf inf inf], (4,), float32)", "action_space": "Discrete(2)", "env_config": {}, "remote_worker_envs": false, "remote_env_batch_wait_ms": 0, "env_task_fn": null, "render_env": false, "record_env": false, "clip_rewards": null, "normalize_actions": true, "clip_actions": false, "preprocessor_pref": "deepmind", "log_level": "INFO", "callbacks": "<class 'ray.rllib.agents.callbacks.DefaultCallbacks'>", "ignore_worker_failures": false, "log_sys_usage": true, "fake_sampler": false, "framework": "tf", "eager_tracing": false, "eager_max_retraces": 20, "explore": true, "exploration_config": {"type": "StochasticSampling"}, "evaluation_interval": null, "evaluation_num_episodes": 10, "evaluation_parallel_to_training": false, "in_evaluation": false, "evaluation_config": {}, "evaluation_num_workers": 0, "custom_eval_function": null, "sample_async": false, "sample_collector": "<class 'ray.rllib.evaluation.collectors.simple_list_collector.SimpleListCollector'>", "observation_filter": "NoFilter", "synchronize_filters": true, "tf_session_args": 
{"intra_op_parallelism_threads": 2, "inter_op_parallelism_threads": 2, "gpu_options": {"allow_growth": true}, "log_device_placement": false, "device_count": {"CPU": 1}, "allow_soft_placement": true}, "local_tf_session_args": {"intra_op_parallelism_threads": 8, "inter_op_parallelism_threads": 8}, "compress_observations": false, "collect_metrics_timeout": 180, "metrics_smoothing_episodes": 100, "min_iter_time_s": 0, "timesteps_per_iteration": 0, "seed": null, "extra_python_environs_for_driver": {}, "extra_python_environs_for_worker": {}, "num_gpus": 0, "_fake_gpus": false, "num_cpus_per_worker": 1, "num_gpus_per_worker": 0, "custom_resources_per_worker": {}, "num_cpus_for_driver": 1, "placement_strategy": "PACK", "input": "<function _input at 0x7f9f882e6280>", "input_config": {}, "actions_in_input_normalized": false, "input_evaluation": [], "postprocess_inputs": false, "shuffle_buffer_size": 0, "output": null, "output_compress_columns": ["obs", "new_obs"], "output_max_file_size": 67108864, "multiagent": {"policies": {"default_policy": [null, null, null, {}]}, "policy_map_capacity": 100, "policy_map_cache": null, "policy_mapping_fn": null, "policies_to_train": null, "observation_fn": null, "replay_mode": "independent", "count_steps_by": "env_steps"}, "logger_config": null, "_tf_policy_handles_more_than_one_loss": false, "_disable_preprocessor_api": false, "simple_optimizer": false, "monitor": -1, "use_critic": true, "use_gae": true, "lambda": 1.0, "kl_coeff": 0.2, "sgd_minibatch_size": 128, "shuffle_sequences": true, "num_sgd_iter": 30, "lr_schedule": null, "vf_loss_coeff": 1.0, "entropy_coeff": 0.0, "entropy_coeff_schedule": null, "clip_param": 0.3, "vf_clip_param": 10.0, "grad_clip": null, "kl_target": 0.01, "vf_share_layers": -1}, "time_since_restore": 152.60662579536438, "timesteps_since_restore": 0, "iterations_since_restore": 3, "perf": {"cpu_util_percent": 12.82205882352941, "ram_util_percent": 24.599999999999998}}

{"episode_reward_max": NaN, "episode_reward_min": NaN, "episode_reward_mean": NaN, "episode_len_mean": NaN, "episode_media": {}, "episodes_this_iter": 0, "policy_reward_min": {}, "policy_reward_max": {}, "policy_reward_mean": {}, "custom_metrics": {}, "hist_stats": {"episode_reward": [], "episode_lengths": []}, "sampler_perf": {}, "off_policy_estimator": {}, "num_healthy_workers": 0, "timesteps_total": 16000, "timesteps_this_iter": 0, "agent_timesteps_total": 16000, "timers": {"sample_time_ms": 49157.295, "sample_throughput": 81.371, "load_time_ms": 2.913, "load_throughput": 1373296.171, "learn_time_ms": 3999.123, "learn_throughput": 1000.219}, "info": {"learner": {"default_policy": {"learner_stats": {"cur_kl_coeff": 0.44999998807907104, "cur_lr": 4.999999873689376e-05, "total_loss": 638.6043701171875, "policy_loss": -0.011565622873604298, "vf_loss": 638.6130981445312, "vf_explained_var": 0.16065333783626556, "kl": 0.006244865711778402, "entropy": 0.5856912732124329, "entropy_coeff": 0.0, "model": {}}, "custom_metrics": {}}}, "num_steps_sampled": 16000, "num_agent_steps_sampled": 16000, "num_steps_trained": 16000, "num_agent_steps_trained": 16000, "num_steps_trained_this_iter": 0}, "done": false, "episodes_total": 0, "training_iteration": 4, "trial_id": "57526_00000", "experiment_id": "994aa06470444e9685b6fad672b7500c", "date": "2021-12-22_17-45-08", "timestamp": 1640213108, "time_this_iter_s": 47.776912450790405, "time_total_s": 200.38353824615479, "pid": 305610, "hostname": "john-desktop", "node_ip": "192.168.0.193", "config": {"num_workers": 0, "num_envs_per_worker": 1, "create_env_on_driver": false, "rollout_fragment_length": 1000, "batch_mode": "truncate_episodes", "gamma": 0.99, "lr": 5e-05, "train_batch_size": 4000, "model": {"_use_default_native_models": false, "_disable_preprocessor_api": false, "fcnet_hiddens": [256, 256], "fcnet_activation": "tanh", "conv_filters": null, "conv_activation": "relu", "post_fcnet_hiddens": [], "post_fcnet_activation": 
"relu", "free_log_std": false, "no_final_linear": false, "vf_share_layers": false, "use_lstm": false, "max_seq_len": 20, "lstm_cell_size": 256, "lstm_use_prev_action": false, "lstm_use_prev_reward": false, "_time_major": false, "use_attention": false, "attention_num_transformer_units": 1, "attention_dim": 64, "attention_num_heads": 1, "attention_head_dim": 32, "attention_memory_inference": 50, "attention_memory_training": 50, "attention_position_wise_mlp_dim": 32, "attention_init_gru_gate_bias": 2.0, "attention_use_n_prev_actions": 0, "attention_use_n_prev_rewards": 0, "framestack": true, "dim": 84, "grayscale": false, "zero_mean": true, "custom_model": null, "custom_model_config": {}, "custom_action_dist": null, "custom_preprocessor": null, "lstm_use_prev_action_reward": -1}, "optimizer": {}, "horizon": null, "soft_horizon": false, "no_done_at_end": false, "env": null, "observation_space": "Box([-inf -inf -inf -inf], [inf inf inf inf], (4,), float32)", "action_space": "Discrete(2)", "env_config": {}, "remote_worker_envs": false, "remote_env_batch_wait_ms": 0, "env_task_fn": null, "render_env": false, "record_env": false, "clip_rewards": null, "normalize_actions": true, "clip_actions": false, "preprocessor_pref": "deepmind", "log_level": "INFO", "callbacks": "<class 'ray.rllib.agents.callbacks.DefaultCallbacks'>", "ignore_worker_failures": false, "log_sys_usage": true, "fake_sampler": false, "framework": "tf", "eager_tracing": false, "eager_max_retraces": 20, "explore": true, "exploration_config": {"type": "StochasticSampling"}, "evaluation_interval": null, "evaluation_num_episodes": 10, "evaluation_parallel_to_training": false, "in_evaluation": false, "evaluation_config": {}, "evaluation_num_workers": 0, "custom_eval_function": null, "sample_async": false, "sample_collector": "<class 'ray.rllib.evaluation.collectors.simple_list_collector.SimpleListCollector'>", "observation_filter": "NoFilter", "synchronize_filters": true, "tf_session_args": 
{"intra_op_parallelism_threads": 2, "inter_op_parallelism_threads": 2, "gpu_options": {"allow_growth": true}, "log_device_placement": false, "device_count": {"CPU": 1}, "allow_soft_placement": true}, "local_tf_session_args": {"intra_op_parallelism_threads": 8, "inter_op_parallelism_threads": 8}, "compress_observations": false, "collect_metrics_timeout": 180, "metrics_smoothing_episodes": 100, "min_iter_time_s": 0, "timesteps_per_iteration": 0, "seed": null, "extra_python_environs_for_driver": {}, "extra_python_environs_for_worker": {}, "num_gpus": 0, "_fake_gpus": false, "num_cpus_per_worker": 1, "num_gpus_per_worker": 0, "custom_resources_per_worker": {}, "num_cpus_for_driver": 1, "placement_strategy": "PACK", "input": "<function _input at 0x7f9f472deaf0>", "input_config": {}, "actions_in_input_normalized": false, "input_evaluation": [], "postprocess_inputs": false, "shuffle_buffer_size": 0, "output": null, "output_compress_columns": ["obs", "new_obs"], "output_max_file_size": 67108864, "multiagent": {"policies": {"default_policy": [null, null, null, {}]}, "policy_map_capacity": 100, "policy_map_cache": null, "policy_mapping_fn": null, "policies_to_train": null, "observation_fn": null, "replay_mode": "independent", "count_steps_by": "env_steps"}, "logger_config": null, "_tf_policy_handles_more_than_one_loss": false, "_disable_preprocessor_api": false, "simple_optimizer": false, "monitor": -1, "use_critic": true, "use_gae": true, "lambda": 1.0, "kl_coeff": 0.2, "sgd_minibatch_size": 128, "shuffle_sequences": true, "num_sgd_iter": 30, "lr_schedule": null, "vf_loss_coeff": 1.0, "entropy_coeff": 0.0, "entropy_coeff_schedule": null, "clip_param": 0.3, "vf_clip_param": 10.0, "grad_clip": null, "kl_target": 0.01, "vf_share_layers": -1}, "time_since_restore": 200.38353824615479, "timesteps_since_restore": 0, "iterations_since_restore": 4, "perf": {"cpu_util_percent": 12.361764705882353, "ram_util_percent": 25.00441176470588}}

{"episode_reward_max": NaN, "episode_reward_min": NaN, "episode_reward_mean": NaN, "episode_len_mean": NaN, "episode_media": {}, "episodes_this_iter": 0, "policy_reward_min": {}, "policy_reward_max": {}, "policy_reward_mean": {}, "custom_metrics": {}, "hist_stats": {"episode_reward": [], "episode_lengths": []}, "sampler_perf": {}, "off_policy_estimator": {}, "num_healthy_workers": 0, "timesteps_total": 20000, "timesteps_this_iter": 0, "agent_timesteps_total": 20000, "timers": {"sample_time_ms": 48806.29, "sample_throughput": 81.957, "load_time_ms": 2.819, "load_throughput": 1419056.061, "learn_time_ms": 3979.531, "learn_throughput": 1005.144}, "info": {"learner": {"default_policy": {"learner_stats": {"cur_kl_coeff": 0.44999998807907104, "cur_lr": 4.999999873689376e-05, "total_loss": 586.5557861328125, "policy_loss": -0.012883122079074383, "vf_loss": 586.56591796875, "vf_explained_var": 0.2517547309398651, "kl": 0.006063793320208788, "entropy": 0.5621259212493896, "entropy_coeff": 0.0, "model": {}}, "custom_metrics": {}}}, "num_steps_sampled": 20000, "num_agent_steps_sampled": 20000, "num_steps_trained": 20000, "num_agent_steps_trained": 20000, "num_steps_trained_this_iter": 0}, "done": false, "episodes_total": 0, "training_iteration": 5, "trial_id": "57526_00000", "experiment_id": "994aa06470444e9685b6fad672b7500c", "date": "2021-12-22_17-45-55", "timestamp": 1640213155, "time_this_iter_s": 47.30190563201904, "time_total_s": 247.68544387817383, "pid": 305610, "hostname": "john-desktop", "node_ip": "192.168.0.193", "config": {"num_workers": 0, "num_envs_per_worker": 1, "create_env_on_driver": false, "rollout_fragment_length": 1000, "batch_mode": "truncate_episodes", "gamma": 0.99, "lr": 5e-05, "train_batch_size": 4000, "model": {"_use_default_native_models": false, "_disable_preprocessor_api": false, "fcnet_hiddens": [256, 256], "fcnet_activation": "tanh", "conv_filters": null, "conv_activation": "relu", "post_fcnet_hiddens": [], "post_fcnet_activation": "relu", 
"free_log_std": false, "no_final_linear": false, "vf_share_layers": false, "use_lstm": false, "max_seq_len": 20, "lstm_cell_size": 256, "lstm_use_prev_action": false, "lstm_use_prev_reward": false, "_time_major": false, "use_attention": false, "attention_num_transformer_units": 1, "attention_dim": 64, "attention_num_heads": 1, "attention_head_dim": 32, "attention_memory_inference": 50, "attention_memory_training": 50, "attention_position_wise_mlp_dim": 32, "attention_init_gru_gate_bias": 2.0, "attention_use_n_prev_actions": 0, "attention_use_n_prev_rewards": 0, "framestack": true, "dim": 84, "grayscale": false, "zero_mean": true, "custom_model": null, "custom_model_config": {}, "custom_action_dist": null, "custom_preprocessor": null, "lstm_use_prev_action_reward": -1}, "optimizer": {}, "horizon": null, "soft_horizon": false, "no_done_at_end": false, "env": null, "observation_space": "Box([-inf -inf -inf -inf], [inf inf inf inf], (4,), float32)", "action_space": "Discrete(2)", "env_config": {}, "remote_worker_envs": false, "remote_env_batch_wait_ms": 0, "env_task_fn": null, "render_env": false, "record_env": false, "clip_rewards": null, "normalize_actions": true, "clip_actions": false, "preprocessor_pref": "deepmind", "log_level": "INFO", "callbacks": "<class 'ray.rllib.agents.callbacks.DefaultCallbacks'>", "ignore_worker_failures": false, "log_sys_usage": true, "fake_sampler": false, "framework": "tf", "eager_tracing": false, "eager_max_retraces": 20, "explore": true, "exploration_config": {"type": "StochasticSampling"}, "evaluation_interval": null, "evaluation_num_episodes": 10, "evaluation_parallel_to_training": false, "in_evaluation": false, "evaluation_config": {}, "evaluation_num_workers": 0, "custom_eval_function": null, "sample_async": false, "sample_collector": "<class 'ray.rllib.evaluation.collectors.simple_list_collector.SimpleListCollector'>", "observation_filter": "NoFilter", "synchronize_filters": true, "tf_session_args": 
{"intra_op_parallelism_threads": 2, "inter_op_parallelism_threads": 2, "gpu_options": {"allow_growth": true}, "log_device_placement": false, "device_count": {"CPU": 1}, "allow_soft_placement": true}, "local_tf_session_args": {"intra_op_parallelism_threads": 8, "inter_op_parallelism_threads": 8}, "compress_observations": false, "collect_metrics_timeout": 180, "metrics_smoothing_episodes": 100, "min_iter_time_s": 0, "timesteps_per_iteration": 0, "seed": null, "extra_python_environs_for_driver": {}, "extra_python_environs_for_worker": {}, "num_gpus": 0, "_fake_gpus": false, "num_cpus_per_worker": 1, "num_gpus_per_worker": 0, "custom_resources_per_worker": {}, "num_cpus_for_driver": 1, "placement_strategy": "PACK", "input": "<function _input at 0x7f9f882e6280>", "input_config": {}, "actions_in_input_normalized": false, "input_evaluation": [], "postprocess_inputs": false, "shuffle_buffer_size": 0, "output": null, "output_compress_columns": ["obs", "new_obs"], "output_max_file_size": 67108864, "multiagent": {"policies": {"default_policy": [null, null, null, {}]}, "policy_map_capacity": 100, "policy_map_cache": null, "policy_mapping_fn": null, "policies_to_train": null, "observation_fn": null, "replay_mode": "independent", "count_steps_by": "env_steps"}, "logger_config": null, "_tf_policy_handles_more_than_one_loss": false, "_disable_preprocessor_api": false, "simple_optimizer": false, "monitor": -1, "use_critic": true, "use_gae": true, "lambda": 1.0, "kl_coeff": 0.2, "sgd_minibatch_size": 128, "shuffle_sequences": true, "num_sgd_iter": 30, "lr_schedule": null, "vf_loss_coeff": 1.0, "entropy_coeff": 0.0, "entropy_coeff_schedule": null, "clip_param": 0.3, "vf_clip_param": 10.0, "grad_clip": null, "kl_target": 0.01, "vf_share_layers": -1}, "time_since_restore": 247.68544387817383, "timesteps_since_restore": 0, "iterations_since_restore": 5, "perf": {"cpu_util_percent": 12.397014925373133, "ram_util_percent": 25.486567164179107}}

```

The learning itself is converging, however.
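Both observations can be checked together by reading `result.json` and printing, per iteration, the episode count, whether the mean reward is NaN, and one learner statistic. This is a minimal sketch, not part of Ray: `summarize_results` is a hypothetical helper, it assumes the file holds one JSON object per line (the sample above is wrapped for display), and it uses only keys that appear in the output above.

```python
import json
import math


def summarize_results(lines):
    """Summarize result records given as an iterable of JSON strings.

    Returns one tuple per record:
    (training_iteration, episodes_this_iter, reward_is_nan, vf_explained_var).
    """
    rows = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        # json.loads accepts the non-standard NaN literal by default.
        record = json.loads(line)
        reward = record.get("episode_reward_mean")
        # Key path taken from the records shown in this issue.
        learner_stats = (
            record.get("info", {})
            .get("learner", {})
            .get("default_policy", {})
            .get("learner_stats", {})
        )
        rows.append(
            (
                record.get("training_iteration"),
                record.get("episodes_this_iter"),
                isinstance(reward, float) and math.isnan(reward),
                learner_stats.get("vf_explained_var"),
            )
        )
    return rows
```

To use it, pass an open file handle, e.g. `summarize_results(open("result.json"))`, where the path is wherever the trial directory was written.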

I've had a go at debugging this. In `ray/rllib/agents/trainer.py`, line 920, `step_results = next(self.train_exec_impl)` definitely returns a dict with all of those NaNs. Stepping in further, I get confused by all of the recursion in `ray/util/iter.py`, and I have not been able to find the root cause yet.
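One detail in the output above may be relevant: every record has `"episodes_this_iter": 0` and empty `hist_stats` lists, so the NaNs could simply mean that no completed episodes ever reach the metrics code. The sketch below is only an illustration of that hypothesis, not RLlib's implementation; `summarize_episode_rewards` is a hypothetical stand-in showing how a summary over an empty episode list produces NaN for max, min, and mean.

```python
import math


def summarize_episode_rewards(rewards):
    """Hypothetical reward summary (not RLlib code).

    With no completed episodes there is nothing to aggregate,
    so every statistic is reported as NaN.
    """
    if not rewards:
        nan = float("nan")
        return {
            "episode_reward_max": nan,
            "episode_reward_min": nan,
            "episode_reward_mean": nan,
        }
    return {
        "episode_reward_max": max(rewards),
        "episode_reward_min": min(rewards),
        "episode_reward_mean": sum(rewards) / len(rewards),
    }


# Empty input mirrors "hist_stats": {"episode_reward": []} in the records above.
empty_summary = summarize_episode_rewards([])
print(all(math.isnan(v) for v in empty_summary.values()))
```

If this is what is happening, the question becomes why episodes reported by the client are not counted on the server side, rather than where the NaN arithmetic occurs.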

### Versions / Dependencies

python 3.8 OR python 3.9

ray 1.9

ubuntu 20.04

tensorflow 2.4

### Reproduction script

`ray/rllib/examples/serving/cartpole_server.py --local-mode --num-workers=0`

`ray/rllib/examples/serving/cartpole_client.py --inference-mode=remote`

### Anything else

_No response_

### Are you willing to submit a PR?

- [ ] Yes I am willing to submit a PR!