How severe does this issue affect your experience of using Ray?

- None: Just asking a question out of curiosity

Hi!

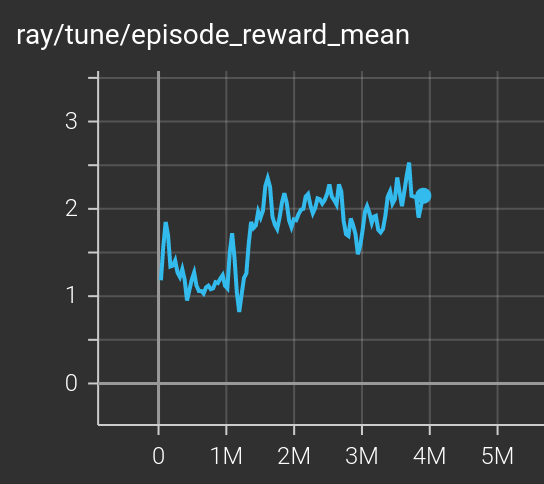

I have been trying for the last couple of days to train pong using PPO using the tuned example pong-ppo.yaml from ray release 2.4. Correct me if I am wrong, but I think the file has an issue regarding the rollout_fragment_length. It seems a constraint from algorithm_config.py:2850 raises an exception as rollout_fragment_length * num_rollout_workers * num_envs_per_worker does not match batch_size. I have tried several values which I can’t remember, but all in the low range (<~50), and I still could not do better than -19 after 50M steps (note that I should have stopped way before but I am a noob on this topic). Following a moment of inspiration, I took the atari-ppo.yaml as a comparison. I then changed rollout_fragment_length to match the one from atari i.e. 100 instead of 20 and removed full_action_space and repeat_action_probability

It is doing much better now. So for the questions:

1/ Can you confirm the rollout_fragment_length is equivalent to the number of frames (or steps) seen by the learner ? Could it be that 20 was enough in the original config because of some frameskip ?

2/ I would like to confirm the minimum number of fragment length as an exercise for hyperparameter tuning. Is there a way (like an util script) to export yaml to python config to make sure the baseline of params are the same ?

pong-ppo:

env: ALE/Pong-v5

run: PPO

config:

# Works for both torch and tf.

framework: torch

...

=>

config = ( # 1. Configure the algorithm,

PPOConfig()

.environment("ALE/Pong-v5")

.framework("torch")

...

Thanks in advance!