Hi, everyone:

I am a beginner with Ray, and I’ve always aimed to make the most of my limited personal computing resources. This is probably one of the main reasons why I wanted to learn Ray and its libraries. Hmmmm, I believe many students and individual researchers share the same motivation.

After running some experiments with Ray Tune (all Python-based), I started wondering and wanted to ask for help (any help would be greatly appreciated!):

- Is Ray still effective and efficient on a single machine?

- Is it possible to run parallel experiments on a single machine with Ray (Tune in my case)?

- Is my script set up correctly for this purpose?

- Anything I missed?

The story:

- My computing resources are very limited: single machine with a 12-core CPU and an RTX 3080 Ti GPU with 12GB of memory.

- My toy experiment doesn’t fully utilize the available resources: a single run uses about 11% GPU utilization and 300MiB / 11019MiB of GPU memory.

- Theoretically it should be possible to perform 8-9 experiments concurrently for such toy experiments on such a machine.

- Naturally, I resorted to Ray, expecting it to help manage and run parallel experiments with different groups of hyperparameters.

- However, with the script below, I don’t see any parallel execution, even though I’ve set max_concurrent_trials in tune.run(). As far as I can observe, all experiments run one by one. So far I don’t know how to fix my code to achieve proper parallelism.

- Below is my Ray Tune script (ray_experiment.py):

import os

import ray
from ray import tune
from ray.tune import CLIReporter
from ray.tune.schedulers import ASHAScheduler

from Simulation import run_simulations  # Trainable object in Ray Tune
from utils.trial_name_generator import trial_name_generator

if __name__ == '__main__':
    ray.init()  # Debug mode: ray.init(local_mode=True)
    # ray.init(num_cpus=12, num_gpus=1)
    print(ray.available_resources())

    current_dir = os.path.abspath(os.getcwd())  # absolute path of the current directory

    params_groups = {
        'exp_name': 'Ray_Tune',
        # Search space
        'lr': tune.choice([1e-7, 1e-4]),
        'simLength': tune.choice([400, 800]),
    }

    reporter = CLIReporter(
        metric_columns=["exp_progress", "eval_episodes", "best_r", "current_r"],
        print_intermediate_tables=True,
    )

    # scheduler = ASHAScheduler()

    analysis = tune.run(
        run_simulations,
        name=params_groups['exp_name'],
        mode="max",
        config=params_groups,
        resources_per_trial={"gpu": 0.25, "cpu": 10},
        max_concurrent_trials=8,
        # scheduler=scheduler,
        storage_path=f'{current_dir}/logs/',  # Directory to save logs
        trial_dirname_creator=trial_name_generator,
        trial_name_creator=trial_name_generator,
        # resume="AUTO"
    )

    print("Best config:", analysis.get_best_config(metric="best_r", mode="max"))

    ray.shutdown()

Hi libeskyi,

Welcome to the Ray community! It’s great to hear you’re trying things out.

Is Ray still effective and efficient on a single machine?

I would say so, yea! Ray is designed to parallelize workloads and can use multiple cores on a single machine to run concurrent tasks. However, efficient resource allocation is key to maximizing performance. Ray can even run on computers that don’t have GPUs; it runs fine on my MacBook Pro too (even though it does run faster on GPUs).

Is it possible to run parallel experiments on a single machine with Ray (Tune in my case)?

Yes, it is possible to run parallel experiments on a single machine using Ray Tune. By default, Tune runs concurrent trials based on the number of CPUs available on your machine. However, inefficient resource allocation (e.g., allocating too many CPUs per trial) can limit this. Just try to make sure you’re doing the best you can with the number of CPUs you have.

Is my script set up correctly for this purpose?

At a glance it looks great, but a few things might help you optimize your setup.

In ray.init() you can specify which resources you want Ray to use:

ray.init(num_cpus=12, num_gpus=1)

This might be helpful for figuring out your resource allocation.

Another thing I noted is that the setting resources_per_trial={"gpu": 0.25, "cpu": 10} might be a little high. Given your 12-core CPU, each trial requiring 10 CPUs would limit execution to a single trial at a time. If this is intended then go ahead though!
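The arithmetic behind this can be sketched in a few lines. This is only an illustration of how logical resource requests bound concurrency, assuming the machine from this thread (12 CPUs, 1 GPU); the helper name max_parallel_trials is made up for this example and is not part of Ray.

```python
import math


def max_parallel_trials(total_cpus, total_gpus, cpu_per_trial, gpu_per_trial,
                        max_concurrent=None):
    """Upper bound on how many trials fit at once (hypothetical helper, not a Ray API).

    Each resource that a trial requests caps concurrency at
    floor(total / per_trial); the smallest cap wins.
    """
    limits = []
    if cpu_per_trial > 0:
        # Small epsilon guards against float rounding such as 0.999999 -> 0.
        limits.append(math.floor(total_cpus / cpu_per_trial + 1e-9))
    if gpu_per_trial > 0:
        limits.append(math.floor(total_gpus / gpu_per_trial + 1e-9))
    if max_concurrent is not None:
        limits.append(max_concurrent)
    if not limits:
        raise ValueError("at least one limit is needed to bound concurrency")
    return min(limits)


# Settings that appear in this thread, on a 12-CPU / 1-GPU machine:
print(max_parallel_trials(12, 1, cpu_per_trial=10, gpu_per_trial=0.25, max_concurrent=8))
print(max_parallel_trials(12, 1, cpu_per_trial=4, gpu_per_trial=0.25, max_concurrent=8))
print(max_parallel_trials(12, 1, cpu_per_trial=1, gpu_per_trial=0.25, max_concurrent=8))
```

Note that with "gpu": 0.25 the GPU request alone caps concurrency at 4 trials regardless of max_concurrent_trials=8; under the same assumptions, reaching 8 would need a request of 0.125 GPU or less per trial.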

Anything I missed?

There’s a few more helpful things that I will link below in our docs and some general tips:

- Use

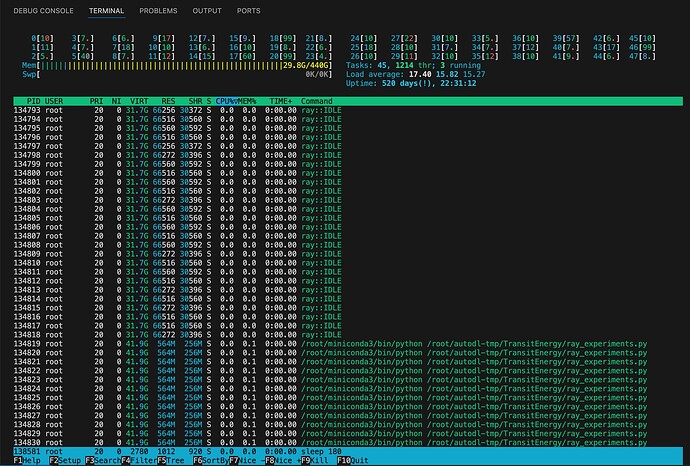

htop or watch -n 1 nvidia-smi to verify CPU/GPU utilization in real time.

- Implementing a scheduler like

ASHAScheduler can help optimize trial execution, especially when working with limited resources

Docs:

Hi Christina,

Thanks for your warm reply!

In fact, resources_per_trial={"gpu": 0.25, "cpu": 10} was my mistake. I pasted the code carelessly, sorry for that. In the code I actually run, I set resources_per_trial={"gpu": 0.25}, expecting Ray Tune to allocate the CPUs automatically.

As you suggested, I also tried declaring the total resources with ray.init(num_cpus=12, num_gpus=1) and setting a lower resource request per trial, resources_per_trial={"gpu": 0.25, "cpu": 1}, to make sure parallel execution is possible.

However, all my attempts failed. According to the CLIReporter output in the terminal and the resource usage shown by watch -n 1 nvidia-smi, the experiments still run one by one, and the resource utilization with Ray Tune is the same as without it.

Thanks, and looking forward to the team’s further guidance!

Hmm, okay, a few more things to try to debug this:

- Ensure you’re not accidentally overriding your ray.init() settings by calling it multiple times. Only one call should initialize the resources.

- Try running the Ray Debugger to see what’s going on (see "Using the Ray Debugger" in the Ray 2.42.0 docs).

- Make sure Ray is aware of all resources by printing ray.available_resources() after initialization to confirm it recognizes the full count of your CPUs and GPU. Let me know what the output says.

- Can you send me what ray status prints out, so we can check the cluster’s resource usage and confirm that resources are being allocated as expected? Additionally, are there any errors or warnings in ray status?
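A minimal sketch of the resource check from the list above, assuming the 12-CPU / 1-GPU machine described in this thread. The helper names resource_shortfall and snapshot_ray_resources are made up for this example; the only Ray calls used are ray.is_initialized(), ray.init(), ray.cluster_resources() and ray.available_resources().

```python
def resource_shortfall(reported, expected):
    """Return {name: missing_amount} for every resource Ray reports less of than expected.

    `reported` is a dict such as the one returned by ray.cluster_resources();
    `expected` is what you believe the machine has (an assumption you supply).
    """
    shortfall = {}
    for name, want in expected.items():
        have = reported.get(name, 0.0)  # a missing key means Ray sees none of it
        if have < want:
            shortfall[name] = want - have
    return shortfall


def snapshot_ray_resources():
    """Return (total, currently free) resource dicts. Requires Ray to be installed."""
    import ray  # imported lazily so the helper above works without Ray

    if not ray.is_initialized():
        ray.init()  # avoids a second, conflicting ray.init() call
    return ray.cluster_resources(), ray.available_resources()
```

Usage would look like `total, free = snapshot_ray_resources()` followed by `print(resource_shortfall(total, {"CPU": 12, "GPU": 1}))`. An empty dict means Ray sees everything you expect; a "GPU" entry would suggest Ray did not detect the GPU, in which case trials that request a GPU fraction would wait for it.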

Another doc that might be helpful:

https://docs.ray.io/en/latest/tune/tutorials/tune-lifecycle.html#resource-management-in-tune

Hi, @christina, thanks for your kind assistance~

This is my latest ray parallel experiments script:

import os

import ray
from ray import tune
from ray.tune import CLIReporter
from ray.tune.schedulers import ASHAScheduler

from Simulation import run_simulations  # Trainable object in Ray Tune
from utils.trial_name_generator import trial_name_generator

if __name__ == '__main__':
    ray.init()  # Debug mode: ray.init(local_mode=True)
    print(ray.available_resources())

    current_dir = os.path.abspath(os.getcwd())  # absolute path of the current directory

    params_groups = {
        'exp_name': 'Tuning_lr_batch',
        # Search space
        'lr': tune.loguniform(lower=1e-7, upper=1e-4),  # sample in different orders of magnitude
        'batch_size': tune.choice([512, 256]),
        'simLength': tune.choice([2000, 4000]),
    }

    reporter = CLIReporter(
        metric_columns=["exp_progress", "eval_episodes", "best_r", "current_r"],
        print_intermediate_tables=True,
    )

    analysis = tune.run(
        run_simulations,
        name=params_groups['exp_name'],
        mode="max",
        config=params_groups,
        resources_per_trial={"gpu": 0.25, "cpu": 4},  # Defaults to 1 CPU and 0 GPUs
        max_concurrent_trials=8,
        storage_path=f'{current_dir}/logs/',  # Directory to save logs
        trial_dirname_creator=trial_name_generator,
        trial_name_creator=trial_name_generator,
        # resume="AUTO"
    )

    print("Best config:", analysis.get_best_config(metric="best_r", mode="max"))

    ray.shutdown()

Based on this script:

Thanks!

Hello!! Sorry for the late reply. I was out of office for a few days but I am back and catching up on threads.

I think this might be because you are running with local_mode=True. I took a look at some older threads like this one: What is local mode?

It will run things in series instead of in parallel.

The mode LOCAL_MODE should be used if this Worker is a driver and if you want to run the driver in a manner equivalent to serial Python for debugging purposes. It will not send remote function calls to the scheduler and will instead execute them in a blocking fashion.

Have you tried turning off local mode and seeing if that’ll help with the parallelism?
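One way to check whether trials really overlap is a small probe that is independent of your simulation. The sketch below is only an illustration: sleepy_trial, probe_parallelism and peak_concurrency are hypothetical names, the sleep duration and trial count are arbitrary, and it assumes a function trainable can return a dict as its final result, as in recent Ray 2.x releases.

```python
import time


def peak_concurrency(intervals):
    """Largest number of (start, end) intervals that are active at the same time."""
    events = []
    for start, end in intervals:
        events.append((start, 1))   # a trial starts
        events.append((end, -1))    # a trial ends
    # At equal timestamps, process ends before starts so that back-to-back
    # trials are not counted as overlapping.
    events.sort(key=lambda e: (e[0], e[1]))
    running = peak = 0
    for _, delta in events:
        running += delta
        peak = max(peak, running)
    return peak


def sleepy_trial(config):
    """Toy trainable: records wall-clock start and end around a sleep."""
    start = time.time()
    time.sleep(config["sleep_s"])
    return {"start": start, "end": time.time()}


def probe_parallelism(num_trials=4, sleep_s=5):
    """Run the toy trials with Tune and report how many overlapped. Requires Ray."""
    import ray
    from ray import tune

    ray.init(ignore_reinit_error=True)  # note: no local_mode=True here
    analysis = tune.run(
        sleepy_trial,
        config={"sleep_s": sleep_s},
        num_samples=num_trials,
        resources_per_trial={"cpu": 1},
        verbose=0,
    )
    intervals = [(t.last_result["start"], t.last_result["end"]) for t in analysis.trials]
    return peak_concurrency(intervals)
```

If `probe_parallelism()` returns 1, the trials ran strictly one after another; a larger value means Tune is scheduling them concurrently and the bottleneck is more likely in the real trainable or its resource request.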
