First, let me ask this: does ASHAScheduler need checkpoints, or could I just get rid of the checkpoint saving? Apparently I save a ton of checkpoints.

I really just want it to not write any files. Maybe you just want to test or debug something. I mean, there must be such an option, no? Even if you implemented checkpointing.

I’m talking about the res_results directory.

Honestly, if it has to write one file per checkpoint or so, then 100k doesn’t sound like much to me. But it’s too much for me, since I just want to try things out and I can’t allow that kind of file system usage.

    import json
    import os

    import torch
    from torch import nn
    from ray import tune

    # mutationsDataset, getDataloaders, NeuralNetwork, train, evaluation
    # and device are defined elsewhere in the script (not shown).

    def main(config, checkpoint_dir=None):
        debug = False

        # Resume from the step stored in the checkpoint, if Tune passes one in
        start = 0
        if checkpoint_dir:
            with open(os.path.join(checkpoint_dir, "checkpoint")) as f:
                state = json.loads(f.read())
            start = state["step"] + 1

        # Parameters
        learning_rate = config["learning_rate"]
        batch_size = config["batch_size"]
        momentum = config["momentum"]
        epochs = 100

        # Read train data
        mutations_dataset = mutationsDataset(
            csv_file='/cluster/home/user/iml21/task_3/data/train.csv')

        # Get dataloaders
        if not debug:
            train_dataloader, evaluation_dataloader = getDataloaders(
                mutations_dataset, batch_size)
        else:
            epochs = 1000
            # Choose a small subset
            train_data = torch.utils.data.Subset(mutations_dataset, range(100))
            train_dataloader = torch.utils.data.DataLoader(train_data)
            evaluation_dataloader = train_dataloader

        # Get model
        model = NeuralNetwork(config["l1"], config["l2"],
                              config["dropout1"], config["dropout2"]).to(device)

        # Get loss function
        loss_fn = nn.BCEWithLogitsLoss()

        # Get optimizer
        optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, betas=(0.9, 0.999))
        #optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate, momentum=momentum)

        # Epochs
        for t in range(start, epochs):
            print(f"\nEpoch {t+1}")
            train(config, train_dataloader, model, loss_fn, optimizer, debug)
            score = evaluation(config, evaluation_dataloader, model, debug)

            # Obtain a checkpoint directory and write the current step to it
            with tune.checkpoint_dir(step=t) as checkpoint_dir:
                path = os.path.join(checkpoint_dir, "checkpoint")
                with open(path, "w") as f:
                    f.write(json.dumps({"step": t}))

            tune.report(score=score)

        print("Done!")

and

    from ray.tune.schedulers import ASHAScheduler

    asha_scheduler = ASHAScheduler(
        time_attr='training_iteration',
        metric='score',
        mode='max',
        max_t=100,
        grace_period=10,
        reduction_factor=3,
        brackets=1)
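To get a feel for when this scheduler can actually stop a trial, here is a small sketch of the rung milestones implied by these settings. It assumes the usual successive-halving rule, with milestones at `grace_period * reduction_factor**k` for as long as they stay within `max_t`. `rung_milestones` is a helper name made up for this illustration, not part of Ray Tune, and Ray's internal rounding may differ.

```python
def rung_milestones(grace_period, reduction_factor, max_t):
    """Iterations at which successive halving compares trials (assumed rule)."""
    milestones = []
    t = grace_period
    # Keep multiplying by the reduction factor until we would pass max_t
    while t <= max_t:
        milestones.append(t)
        t *= reduction_factor
    return milestones


# Values taken from the ASHAScheduler call in this post
milestones = rung_milestones(grace_period=10, reduction_factor=3, max_t=100)
print(milestones)
```

Under that assumption the scheduler only makes decisions at a handful of iterations, while my training function writes a checkpoint at every epoch.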

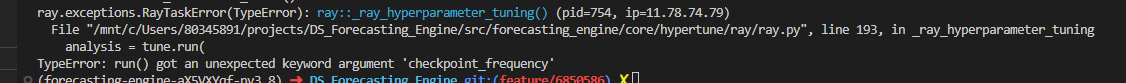

    analysis = tune.run(
        main,
        config=params,
        num_samples=20,
        scheduler=asha_scheduler
    )
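For reference, here is a rough back-of-the-envelope count of the checkpoints this setup could write. It is only an upper bound under my own assumptions: no trial is stopped early, no old checkpoint is deleted, and each `tune.checkpoint_dir(step=t)` call results in one directory holding the single `checkpoint` file that `main` writes. Any other per-trial files that Tune writes are not counted.

```python
num_samples = 20          # from tune.run(num_samples=20)
epochs = 100              # from main(); one checkpoint is written per epoch
files_per_checkpoint = 1  # main() writes a single "checkpoint" file per step

# Upper bound: every trial runs all epochs and keeps every checkpoint
max_checkpoint_dirs = num_samples * epochs
max_checkpoint_files = max_checkpoint_dirs * files_per_checkpoint

print(max_checkpoint_dirs, max_checkpoint_files)
```

If directories count towards the file quota as well, the bound roughly doubles, and it scales linearly with the number of samples and with the number of jobs I start.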

Oh, and sure, I can point it towards another folder, but the maximum file quota I have is 1 million. If I start a bunch of jobs with a lot of possible parameters, it looks like Ray Tune would go crazy and easily fill that 1 million quota.

I’m probably doing something wrong, and I’m happy to learn what. But as I said, the main question remains: can I turn off the file writing?