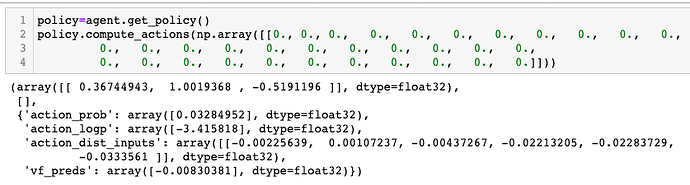

The action has 3 elements. What does action_dist_inputs mean?

I thought it was [mean, std, low, high], but that doesn't match the dimension.

Can someone help me? Thanks.

action_prob: The probability of the action that is selected. In a categorical distribution this would be the softmax value for that action.

action_logp: The natural log of action_prob.

action_dist_inputs: The logits coming from the last layer of the model. These are used as input to the action distribution.

vf_preds: The value function estimate of the input state.
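
To make the relationship between these fields concrete for the categorical case, here is a minimal pure-Python sketch. The logits and the chosen action index are made-up values for illustration, and the softmax helper is my own, not an RLlib function.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for a 3-way discrete action space (not real RLlib output).
action_dist_inputs = [2.0, 0.5, -1.0]
action = 0  # index of the action that was sampled

probs = softmax(action_dist_inputs)
action_prob = probs[action]          # softmax value of the selected action
action_logp = math.log(action_prob)  # log of action_prob
```

For continuous actions the distribution inputs are not per-action logits, so this softmax reading only applies to discrete action spaces.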

Thanks a lot.

I am focusing on continuous actions, for example with SAC. What do the values in action_dist_inputs mean for continuous actions?

When I call agent.compute_single_action() with unsquash_action=True, the actions are not normalized. However, when I call agent.get_policy().model().compute_action() with unsquash_action=True, the actions are normalized.

How can I get the normalized actions back to non-normalized in batch?

I checked the source code: for TorchSquashedGaussian, it looks like action_dist_inputs is [mean, log-std].

Using the functions _squash() and _unsquash(), I am able to convert between the squashed and unsquashed action values.
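
In case it helps someone else, here is a rough pure-Python sketch of what I ended up with. It assumes the first half of action_dist_inputs is the mean and the second half is the log-std, that squashing is a tanh rescaled to [low, high], and that normalized actions lie in [-1, 1]. The function names, bounds, and input values are my own for illustration; this is my reading of the source, not a copy of the RLlib code.

```python
import math

def split_dist_inputs(dist_inputs):
    """Split [mean..., log_std...] into two halves (assumed layout)."""
    half = len(dist_inputs) // 2
    return dist_inputs[:half], dist_inputs[half:]

def squash(raw, low, high):
    """Map unbounded values into [low, high] via tanh."""
    return [(math.tanh(r) + 1.0) / 2.0 * (h - l) + l
            for r, l, h in zip(raw, low, high)]

def unsquash(values, low, high, eps=1e-6):
    """Inverse of squash: map values in [low, high] back to unbounded space."""
    out = []
    for v, l, h in zip(values, low, high):
        normed = (v - l) / (h - l) * 2.0 - 1.0
        # Clamp away from +/-1 so atanh stays finite.
        normed = min(max(normed, -1.0 + eps), 1.0 - eps)
        out.append(math.atanh(normed))
    return out

def denormalize_batch(batch, low, high):
    """Rescale a batch of actions from [-1, 1] to [low, high]."""
    return [[l + (a + 1.0) * (h - l) / 2.0 for a, l, h in zip(row, low, high)]
            for row in batch]

# Hypothetical 3-dimensional action space with bounds [-2, 2] per dimension.
low, high = [-2.0] * 3, [2.0] * 3
mean, log_std = split_dist_inputs([0.1, -0.2, 0.3, -1.0, -1.0, -1.0])
std = [math.exp(s) for s in log_std]
env_actions = denormalize_batch([[-1.0, 0.0, 1.0]], low, high)
```

For the batch case, denormalize_batch is just the affine rescale applied row by row; with NumPy or Torch arrays the same formula broadcasts over the batch dimension.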