1. I’m encountering repeated warnings that nodes are being marked dead due to missed heartbeats, even though the underlying infrastructure appears healthy:

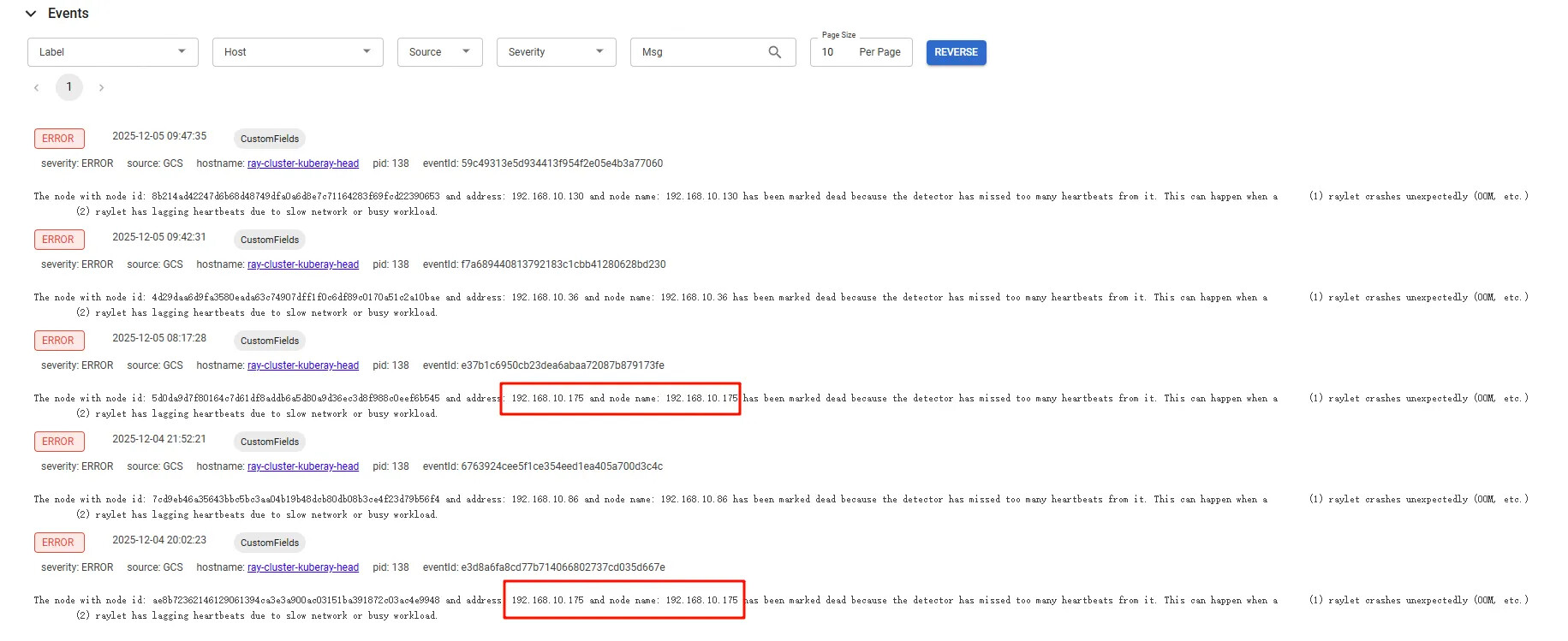

> The node with node id: 5d0da9d7f80164c7d61df8addb6a5d80a9d36ec3d8f988c0eef6b545 and address: 192.168.10.175 and node name: 192.168.10.175 has been marked dead because the detector has missed too many heartbeats from it. This can happen when a
>
> (1) raylet crashes unexpectedly (OOM, etc.)
>
> (2) raylet has lagging heartbeats due to slow network or busy workload.
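To check whether these warnings refer to nodes from the current run or to long-terminated workers (such as 192.168.10.175), the node ID and address can be pulled out of each warning and compared with the cluster's live nodes. This is a minimal sketch that assumes the warning wording stays exactly as quoted above; `parse_dead_node_warning` and `DeadNodeWarning` are hypothetical helpers, not Ray APIs.

```python
import re
from typing import NamedTuple, Optional


class DeadNodeWarning(NamedTuple):
    node_id: str
    address: str
    node_name: str


# Assumes the exact wording of the warning quoted above (hypothetical pattern).
_DEAD_NODE_RE = re.compile(
    r"node id:\s*(?P<node_id>[0-9a-f]+)\s+and address:\s*(?P<address>\S+)"
    r"\s+and node name:\s*(?P<node_name>\S+)\s+has been marked dead"
)


def parse_dead_node_warning(line: str) -> Optional[DeadNodeWarning]:
    """Return the node ID, address and name from a dead-node warning, or None."""
    match = _DEAD_NODE_RE.search(line)
    if match is None:
        return None
    return DeadNodeWarning(**match.groupdict())
```

Running this over the driver or GCS logs and collecting the `address` fields would show whether the warnings concentrate on IPs that belonged to early runs.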

Environment & Reproduction:

The network has been verified to be stable, with no abnormal latency or packet loss.

The issue occurs in the following usage pattern:

Using `ray.train` to run the same training function (`train_func`) every 5 minutes.

Each invocation spawns a new worker on the Ray cluster to execute `train_func`.

Initially, everything runs smoothly for many iterations (hundreds of successful runs).

However, after the cluster accumulates a large amount of metadata (e.g., hundreds of SUCCEEDED tasks, thousands of actors, and hundreds of dead nodes), the “node marked dead” error starts appearing.
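A stripped-down reproduction of this pattern might look like the sketch below. It assumes `TorchTrainer` (which needs PyTorch installed) with a single worker; the actual trainer class, scaling settings and body of `train_func` are not given in this report, so those parts are placeholders. The scheduling helper `run_periodically` is hypothetical and kept independent of Ray so it can be checked on its own.

```python
import time
from typing import Any, Callable, List


def train_func() -> None:
    # Placeholder for the real per-worker training loop (hypothetical).
    pass


def run_train_once() -> Any:
    """Launch one Ray Train run of train_func and block until it finishes."""
    # Imported lazily so the scheduling logic below can be used without Ray.
    from ray.train import ScalingConfig
    from ray.train.torch import TorchTrainer

    trainer = TorchTrainer(train_func, scaling_config=ScalingConfig(num_workers=1))
    return trainer.fit()


def run_periodically(
    job: Callable[[], Any],
    interval_s: float = 300.0,
    iterations: int = 1,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> List[Any]:
    """Call job() `iterations` times, starting a new call every interval_s seconds."""
    results: List[Any] = []
    for i in range(iterations):
        start = clock()
        results.append(job())
        if i < iterations - 1:
            # Sleep only for whatever is left of the interval after the run.
            remaining = interval_s - (clock() - start)
            if remaining > 0:
                sleep(remaining)
    return results
```

Calling `run_periodically(run_train_once, interval_s=300, iterations=500)` against the cluster would approximate the hundreds of runs after which the warnings begin.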

Observed Behavior:

During job startup, Ray appears to attempt to reconnect to old IP addresses (e.g., 192.168.10.175) that belonged to workers from early runs, which were terminated and marked dead long ago.

This suggests that internal metadata (e.g., dead node or actor records) is not being properly cleaned up and may interfere with heartbeat monitoring or node discovery.
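To quantify how much dead-node state the cluster is carrying, and whether an address like 192.168.10.175 is still recorded, the node table can be summarized from the driver. This is a minimal sketch assuming the input is the list returned by `ray.nodes()` and that each entry has `Alive` and `NodeManagerAddress` keys (worth confirming on Ray 2.40); both helper names are hypothetical.

```python
from typing import Any, Dict, Iterable, List


def summarize_nodes(nodes: Iterable[Dict[str, Any]]) -> Dict[str, List[str]]:
    """Split node records into alive and dead node addresses.

    Assumes each record has "Alive" and "NodeManagerAddress" keys, as in the
    dictionaries returned by ray.nodes().
    """
    summary: Dict[str, List[str]] = {"alive": [], "dead": []}
    for node in nodes:
        key = "alive" if node.get("Alive") else "dead"
        summary[key].append(node.get("NodeManagerAddress", "<unknown>"))
    return summary


def addresses_both_alive_and_dead(summary: Dict[str, List[str]]) -> List[str]:
    """Addresses recorded for at least one dead node and one alive node."""
    return sorted(set(summary["alive"]) & set(summary["dead"]))
```

For example, `summarize_nodes(ray.nodes())` after `ray.init(address="auto")` gives the dead-node count directly. The second helper shows whether a new pod has been assigned the IP of an earlier, dead node, which is one possible (unconfirmed) reason old addresses could resurface.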

Mitigation Attempts:

I suspected that excessive caching of destroyed actors was contributing to the issue, so I set:

- `RAY_maximum_gcs_destroyed_actor_cached_count=1000`
- `RAY_DASHBOARD_MAX_ACTORS_TO_CACHE=1000`

However, the problem persists even with these limits in place.
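One thing worth ruling out is that the variables never reached the processes that read them. My understanding, which is an assumption and not verified here, is that both are read at startup by processes on the head node (the GCS and the dashboard). With KubeRay, that would mean setting them in the head pod's container `env` rather than only in the driver's or job's environment. The hypothetical check below, run inside the head pod, reports which variables are missing or hold a different value.

```python
import os
from typing import Dict, Mapping, Optional

# Values from the mitigation attempt above. Assumption: they must be visible
# to the head-node processes (GCS, dashboard) when those processes start.
EXPECTED = {
    "RAY_maximum_gcs_destroyed_actor_cached_count": "1000",
    "RAY_DASHBOARD_MAX_ACTORS_TO_CACHE": "1000",
}


def check_env(
    expected: Mapping[str, str],
    environ: Optional[Mapping[str, str]] = None,
) -> Dict[str, Optional[str]]:
    """Return {name: actual_value} for each variable that is missing or differs."""
    env = os.environ if environ is None else environ
    return {
        name: env.get(name)
        for name, want in expected.items()
        if env.get(name) != want
    }
```

An empty result from `check_env(EXPECTED)` only shows that the current process sees the values. It does not prove that an already-running GCS picked them up, since that depends on when the process was started.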

Versions:

- kuberay-operator: v1.4.0
- ray: 2.40.0
- python: 3.10