1. Severity of the issue: (select one)

None: I’m just curious or want clarification.

Low: Annoying but doesn’t hinder my work.

Medium: Significantly affects my productivity but can find a workaround.

High: Completely blocks me.

2. Environment:

- Ray version: 2.49.2

- Python version: 3.10.18

- OS: Windows 11 24H2

- Cloud/Infrastructure: Local

- Other libs/tools (if relevant): PyTorch

3. What happened vs. what you expected:

I’ve been using tune.Tuner for a PPO training trial. After training finished, I wanted to retrieve the best checkpoint with the following lines:

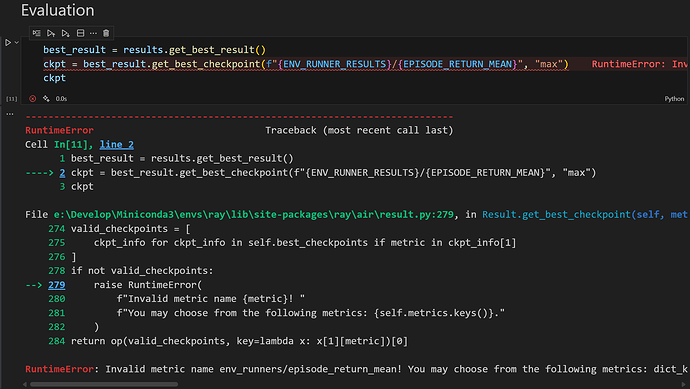

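```python
from ray.rllib.utils.metrics import ENV_RUNNER_RESULTS, EPISODE_RETURN_MEAN

# `results` is the ResultGrid returned by tuner.fit(); get_best_result() with no
# arguments uses the metric/mode configured in TuneConfig.
best_result = results.get_best_result()

# The metric path resolves to "env_runners/episode_return_mean".
ckpt = best_result.get_best_checkpoint(f"{ENV_RUNNER_RESULTS}/{EPISODE_RETURN_MEAN}", "max")
print(ckpt.path)
```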

This worked fine in Ray 2.8.1 with the metric “episode_reward_mean”. But now that all metrics are aggregated under “env_runners”, it seems Tune cannot find keys in nested metrics.
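To make the suspected mismatch concrete, here is a pure-Python sketch (no Ray needed). It assumes nested metric paths are written with `/` as the separator, as in the `get_best_checkpoint` call above; `flatten_metrics` and the sample dict are hypothetical, not Ray APIs. A path like `env_runners/episode_return_mean` only exists as a key once the nested result dict is flattened:

```python
from typing import Any, Dict, Mapping


def flatten_metrics(metrics: Mapping[str, Any], prefix: str = "", sep: str = "/") -> Dict[str, Any]:
    """Flatten a nested result dict into 'parent/child' keys (hypothetical helper)."""
    flat: Dict[str, Any] = {}
    for key, value in metrics.items():
        full_key = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, Mapping):
            # Recurse into sub-dicts such as "env_runners".
            flat.update(flatten_metrics(value, full_key, sep))
        else:
            flat[full_key] = value
    return flat


# Hypothetical result dict shaped like a new-API-stack RLlib train result.
result = {
    "training_iteration": 3,
    "env_runners": {"episode_return_mean": 187.5, "num_episodes": 12},
}

metric = "env_runners/episode_return_mean"
print(metric in result)                   # False: only "env_runners" is a top-level key
print(metric in flatten_metrics(result))  # True once the dict is flattened
print(flatten_metrics(result)[metric])    # 187.5
```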

Hi Morphlng!

I think you’re right: env_runners/episode_return_mean may not be present at the top level of each checkpoint’s metrics, especially if it was not reported in the first iteration, or if it is missing from some checkpoints’ metrics after they have been nested and aggregated.

There’s some discussion of this in the GitHub issue “[Tune] Air output metrics are replaced by irrelevant inferred metrics” (ray-project/ray #45547).

For Ray Tune, the metric would look like this: "evaluation/env_runners/episode_return_mean".

Maybe you can try that and see if it works? Or could you share the list of checkpoint metrics Tune reports? It’s cut off in the screenshot.

Thanks for the reply! I tried reproducing the problem with CartPole, and the full error message is:

```
RuntimeError: Invalid metric name env_runners/episode_return_mean! You may choose from the following metrics: dict_keys(['timers', 'env_runners', 'learners', 'num_training_step_calls_per_iteration', 'num_env_steps_sampled_lifetime', 'fault_tolerance', 'env_runner_group', 'done', 'training_iteration', 'trial_id', 'date', 'timestamp', 'time_this_iter_s', 'time_total_s', 'pid', 'hostname', 'node_ip', 'config', 'time_since_restore', 'iterations_since_restore', 'perf', 'experiment_tag']).
```
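The key list in the error above contains `env_runners` but no `env_runners/...` paths, which fits the nested-dict explanation. Until the lookup is fixed, one possible workaround is to resolve the nested path yourself. A sketch, assuming `Result.best_checkpoints` holds `(checkpoint, metrics)` pairs whose metrics dict is nested like the train result; the helpers `get_nested` and `pick_best` are hypothetical, not part of Ray:

```python
from typing import Any, Iterable, Mapping, Optional, Tuple, TypeVar

T = TypeVar("T")


def get_nested(metrics: Mapping[str, Any], path: str, sep: str = "/") -> Optional[Any]:
    """Resolve a path like 'env_runners/episode_return_mean' against nested dicts.

    Returns None if any level along the path is missing.
    """
    node: Any = metrics
    for part in path.split(sep):
        if not isinstance(node, Mapping) or part not in node:
            return None
        node = node[part]
    return node


def pick_best(
    checkpoints: Iterable[Tuple[T, Mapping[str, Any]]],
    path: str,
    mode: str = "max",
) -> Optional[T]:
    """Return the checkpoint with the best nested metric, skipping entries without it."""
    if mode not in ("max", "min"):
        raise ValueError(f"mode must be 'max' or 'min', got {mode!r}")
    scored = []
    for ckpt, metrics in checkpoints:
        value = get_nested(metrics, path)
        if value is not None:
            scored.append((ckpt, value))
    if not scored:
        return None
    chooser = max if mode == "max" else min
    return chooser(scored, key=lambda item: item[1])[0]


# Dummy (checkpoint, metrics) pairs; plain strings stand in for Checkpoint objects.
history = [
    ("checkpoint_000000", {"env_runners": {"episode_return_mean": 20.0}}),
    ("checkpoint_000001", {"env_runners": {"episode_return_mean": 95.0}}),
    ("checkpoint_000002", {"training_iteration": 3}),  # metric missing, skipped
]
print(pick_best(history, "env_runners/episode_return_mean"))  # checkpoint_000001
```

Against a real run, the call would be along the lines of `pick_best(best_result.best_checkpoints, f"{ENV_RUNNER_RESULTS}/{EPISODE_RETURN_MEAN}")`, which sidesteps Tune's metric-name validation; entries missing the metric are skipped instead of raising.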

I’ve also tried using air.Result to load the results from disk, and that works perfectly. This confuses me a lot, since according to the traceback both paths call the same function.

There is another related issue I want to report.

It seems that RLlib’s default result dict does not contain a field that air.Result needs to read, namely checkpoint_dir_name. So if you pass the directory to Result.from_path, it is likely to report that:

I have written a callback that adds this column manually as a workaround. But it would be great if the Ray team could fix it officially.

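```python
from typing import Optional

from ray.rllib.algorithms.algorithm import Algorithm
from ray.rllib.callbacks.callbacks import RLlibCallback
from ray.rllib.utils.metrics.metrics_logger import MetricsLogger


class CheckpointCallback(RLlibCallback):
    def on_train_result(
        self,
        *,
        algorithm: Algorithm,
        metrics_logger: Optional[MetricsLogger] = None,
        result: dict,
        **kwargs,
    ):
        # `_storage` is Tune's private storage context; it may be None when the
        # algorithm is not run by Tune, so only tag results when it exists.
        if algorithm._storage:
            # checkpoint_dir_name is derived from current_checkpoint_index, so
            # advance the index temporarily to get the next checkpoint's folder name...
            algorithm._storage.current_checkpoint_index += 1
            result["checkpoint_dir_name"] = algorithm._storage.checkpoint_dir_name
            # ...then restore it so Tune's own bookkeeping is unchanged.
            algorithm._storage.current_checkpoint_index -= 1
```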
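The index juggling in the callback above can be exercised without Ray. A minimal sketch, assuming Tune derives `checkpoint_dir_name` from `current_checkpoint_index` using the zero-padded `checkpoint_000000` pattern seen in its checkpoint folders; `FakeStorage` and `tag_result` are hypothetical names, not Ray internals:

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class FakeStorage:
    """Hypothetical stand-in for Tune's private storage context."""

    current_checkpoint_index: int = 0

    @property
    def checkpoint_dir_name(self) -> str:
        # Assumed naming scheme: zero-padded index, e.g. "checkpoint_000003".
        return f"checkpoint_{self.current_checkpoint_index:06d}"


def tag_result(storage: FakeStorage, result: Dict[str, Any]) -> None:
    """Write the next checkpoint's folder name into `result`.

    Same +1 / -1 juggling as the callback, but try/finally guarantees the
    index is restored even if reading the name raises.
    """
    storage.current_checkpoint_index += 1
    try:
        result["checkpoint_dir_name"] = storage.checkpoint_dir_name
    finally:
        storage.current_checkpoint_index -= 1


storage = FakeStorage(current_checkpoint_index=2)
result: Dict[str, Any] = {"training_iteration": 3}
tag_result(storage, result)
print(result["checkpoint_dir_name"])     # checkpoint_000003
print(storage.current_checkpoint_index)  # 2
```

Wrapping the increment and decrement in try/finally is a small hardening over the original callback: if the attribute access ever fails, the shared index is not left off by one.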

Hello! Thank you for surfacing this! Have you had time to file a GitHub issue for these problems, or should I do it? I’ll file a ticket for our RLlib team if needed.
